Tutorial: Game Performance Tuning with Cocos Creator

Performance indicators

Both the engine and mini-games have a performance panel, which exposes the following performance indicators to developers:


Frame time (ms)

The time taken by each frame. The RAIL model recommends producing each frame of an animation in 10 milliseconds or less. Technically, the maximum budget per frame is about 16 milliseconds (1000 milliseconds / 60 frames per second ≈ 16.7 milliseconds), but the browser needs about 6 milliseconds to render each frame, so 10 milliseconds or less per frame is recommended.
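The budget arithmetic above can be written out as a small helper. This is a minimal sketch: `frameBudgetMs` and its `browserOverheadMs` parameter are illustrative names, and the default of 6 ms is only the rough figure quoted above, not a measured value.

```javascript
// Time left for game logic in one frame, in milliseconds.
// browserOverheadMs approximates the time the browser needs to render a frame.
function frameBudgetMs(targetFps, browserOverheadMs = 6) {
  const frameIntervalMs = 1000 / targetFps; // 60 FPS -> about 16.67 ms
  return Math.max(0, frameIntervalMs - browserOverheadMs);
}

const budgetAt60 = frameBudgetMs(60); // about 10.67 ms
const budgetAt30 = frameBudgetMs(30); // about 27.33 ms
```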

Framerate (FPS)

Frame rate, also called frames per second (FPS), is the number of frames displayed per second, typically the number of images in an animation or video; the more frames per second, the smoother the motion. For example, a movie runs at 24 FPS, which means 24 images are played every second, whereas the lowest frame rate most people find acceptable in a game is about 30 FPS. A higher frame rate is not always better, however: the load on the graphics card is roughly resolution × refresh rate, so at the same resolution a higher frame rate sharply increases the amount of data the GPU must process, which can itself cause lag. For the same reason, a higher resolution is not always better. Three related parameters are also shown in the performance panel on some devices: rt-fps (runtime FPS), the real-time frame rate; ex-fps, the frame rate limit; and min-fps, the minimum frame rate.
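To make the relationship between frame time and frame rate concrete, here is a minimal sketch that derives a real-time and a minimum FPS from a list of frame timestamps. The function name and the `rtFps` / `minFps` fields are hypothetical; they only mirror the panel labels above and do not describe how any particular panel computes its values.

```javascript
// Computes the latest and the minimum FPS from frame timestamps in milliseconds.
function summarizeFps(timestampsMs) {
  let minFps = Infinity;
  let lastFps = 0;
  for (let i = 1; i < timestampsMs.length; i++) {
    const deltaMs = timestampsMs[i] - timestampsMs[i - 1];
    if (deltaMs <= 0) continue; // skip duplicate or out-of-order samples
    lastFps = 1000 / deltaMs;
    minFps = Math.min(minFps, lastFps);
  }
  return { rtFps: lastFps, minFps: minFps === Infinity ? 0 : minFps };
}

// Three frames with intervals of 20 ms, 50 ms and 10 ms.
const fpsSummary = summarizeFps([0, 20, 70, 80]);
// fpsSummary.rtFps is 100 (last interval 10 ms); fpsSummary.minFps is 20 (50 ms interval)
```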

Drawcall

The CPU and GPU work in parallel, with a command buffer between them. When the CPU needs to call the graphics API, it adds commands to the command buffer; when the GPU finishes the previous rendering command, it takes the next one from the buffer. A drawcall is one of the many kinds of commands in the command buffer. The CPU has a lot of work to do when submitting a drawcall, including preparing data, state, and commands. Some rendering stalls happen because the GPU renders faster than the CPU can submit drawcalls: the previous rendering is finished while the CPU is still preparing the next drawcall. The performance bottleneck of drawcalls therefore lies in the CPU. The most effective way to optimize drawcalls is batch rendering: merging a large number of small drawcalls into one large drawcall to reduce the total count.
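The effect of batching can be illustrated with a deliberately simplified model, in which consecutive render items that share the same render state are merged into one drawcall. The `texture` and `blend` fields and the `countDrawcalls` helper are assumptions made for this sketch; a real engine checks more state than this.

```javascript
// Counts drawcalls for an ordered render list, assuming consecutive items
// with an identical render state (texture + blend mode) are batched together.
function countDrawcalls(renderItems) {
  let drawcalls = 0;
  let previousKey = null;
  for (const item of renderItems) {
    const key = `${item.texture}|${item.blend}`;
    if (key !== previousKey) {
      drawcalls += 1; // a state change forces a new drawcall
      previousKey = key;
    }
  }
  return drawcalls;
}

const interleaved = [
  { texture: 'a.png', blend: 'normal' },
  { texture: 'b.png', blend: 'normal' },
  { texture: 'a.png', blend: 'normal' },
];
const sharedAtlas = interleaved.map(() => ({ texture: 'atlas.png', blend: 'normal' }));
// countDrawcalls(interleaved) is 3; countDrawcalls(sharedAtlas) is 1
```

In this model, the interleaved textures break the batch on every item, while the same three items drawn from one shared texture collapse into a single drawcall.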

Tris and Verts

Tris and Verts are the numbers of triangles and vertices to be rendered. WebGL has only three basic primitives: points, line segments, and triangles. However complex a model is, it is ultimately built from vertices, which the GPU assembles into small triangular faces and then joins together to draw the model. All kinds of complicated objects are drawn this way. Generally speaking, the fewer vertices and triangles a model has, the lower its complexity, so these two parameters are important for 3D models. Consider two scenarios: in the first, there are 1000 objects with 10 vertices each; in the second, there are 10 objects with 1000 vertices each. Which performs better? GPU rendering is very fast, so rendering triangles built from 10 vertices usually costs about the same as rendering triangles built from 1000 vertices. Of the two scenarios, the second, which has fewer drawcalls, therefore performs better. Of course, if you do special processing on the vertices in the shader, such as complex calculations, you have to weigh the impact of both indicators.

Practice

Lower DrawCall

To reduce drawcalls, start with the factors that affect the rendering state, such as textures, texture rendering mode, and blend mode. In most projects, developers rarely need to modify the engine's default rendering parameters individually, and doing so will break batching.

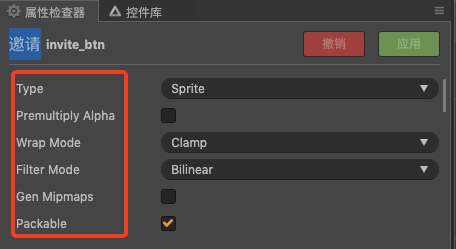

Therefore, in most cases, the biggest reduction in drawcalls comes from the static and dynamic atlas features provided by the engine. Static atlasing means using the Auto Atlas, or a third-party tool such as TexturePacker, to merge the scattered images in your resources, and trying to make the nodes on a given screen use a single atlas. Textures from the same atlas share the same state, so they satisfy the texture-state requirement for batch merging. The engine has two kinds of dynamic atlasing. One is for image resources and is enabled by default; to opt out, uncheck Packable in the panel, or turn off the global switch with cc.dynamicAtlasManager.enabled = false;. The other is for labels and is controlled through the label's cache mode, which can be switched between different modes. Below are some of the measures taken in the author's project and their effect on drawcalls.

Manage nodes reasonably

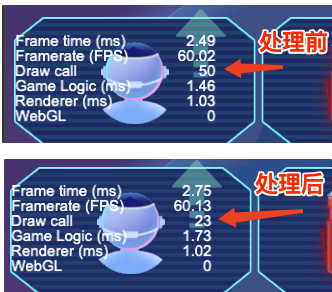

Nodes outside the screen can be removed directly. Doing so reduced the drawcall count from 50 to 23.
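One way to find such nodes is a simple bounds check against the visible area. The sketch below uses plain objects as stand-ins for scene nodes and deactivates them rather than removing them, which is a swapped-in variation on the approach described above; `isOffscreen`, `hideOffscreen`, and the `bounds` / `active` fields are all hypothetical names.

```javascript
// Axis-aligned rectangles: { x, y, width, height } with (x, y) as the bottom-left corner.
function isOffscreen(rect, viewport) {
  return (
    rect.x + rect.width <= viewport.x ||
    rect.x >= viewport.x + viewport.width ||
    rect.y + rect.height <= viewport.y ||
    rect.y >= viewport.y + viewport.height
  );
}

// Deactivates items fully outside the viewport and reactivates visible ones.
// Returns how many previously active items were hidden.
function hideOffscreen(items, viewport) {
  let hidden = 0;
  for (const item of items) {
    const shouldShow = !isOffscreen(item.bounds, viewport);
    if (item.active && !shouldShow) hidden += 1;
    item.active = shouldShow;
  }
  return hidden;
}
```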

Set label’s cache mode reasonably

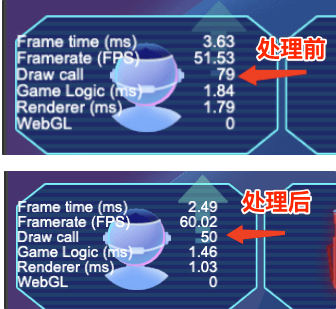

Changing the cache mode of the three labels on the home page to bitmap reduced the home page's drawcall count from 79 to 50.

For label objects that change frequently, the cache mode was changed to char. The difference is barely noticeable on an Apple phone, but the improvement in smoothness is obvious on an Android phone.
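The two label settings described above could be applied from script roughly as follows. This is a sketch assuming the Cocos Creator 2.x `cacheMode` property on `cc.Label` and the `cc.Label.CacheMode` enum; the helper name and its parameters are hypothetical, and `cc` is passed in as an argument only so the function can be exercised outside the engine.

```javascript
// Inside a Cocos Creator 2.x project, `cc` would be the global engine namespace.
function applyLabelCacheModes(cc, staticLabels, frequentlyChangedLabels) {
  for (const label of staticLabels) {
    label.cacheMode = cc.Label.CacheMode.BITMAP; // text that rarely changes
  }
  for (const label of frequentlyChangedLabels) {
    label.cacheMode = cc.Label.CacheMode.CHAR; // text that changes often
  }
}
```

Which labels belong in which group depends on the project, so it is worth comparing drawcall counts before and after on the target devices.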

Merging an Atlas

Atlases should be divided according to screen content: try to pack the image resources used on the same screen into one atlas. Taking a mid-to-late level of the game as an example (earlier levels have too few on-screen nodes for the difference to be obvious), the average drawcall count dropped from 190 to 90, and the peak dropped from 220 to 127.

Troubleshooting issues with Performance

During development, the author uses Chrome DevTools. When troubleshooting performance issues in the browser, it is best to debug in incognito mode so that all browser extensions are disabled, because extensions run in the background and may interfere with the results.

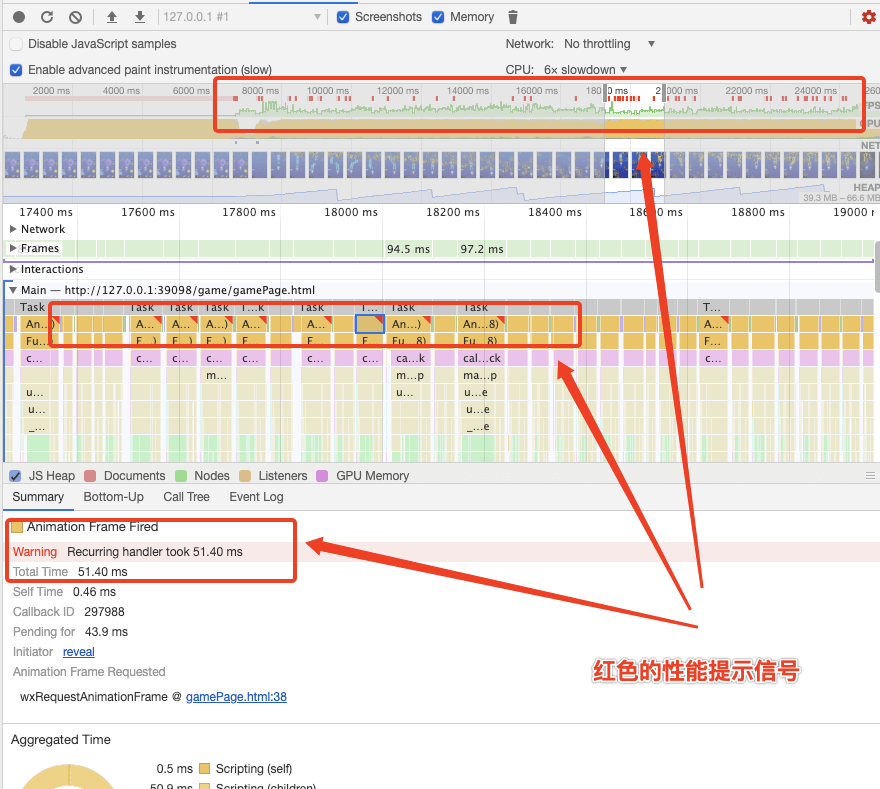

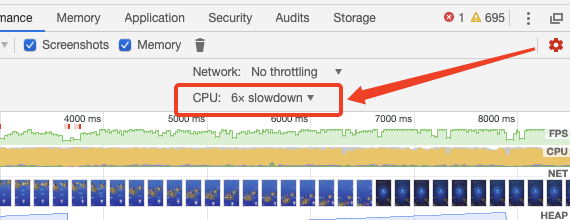

Compared with mobile phones, desktop CPUs are very fast. To simulate the user's hardware as closely as possible, the CPU needs to be throttled. For example, when 6x slowdown is selected, the local CPU computes 6 times slower than normal.

If we record again at this point, a conspicuous red warning appears on the panel:

Recurring handler

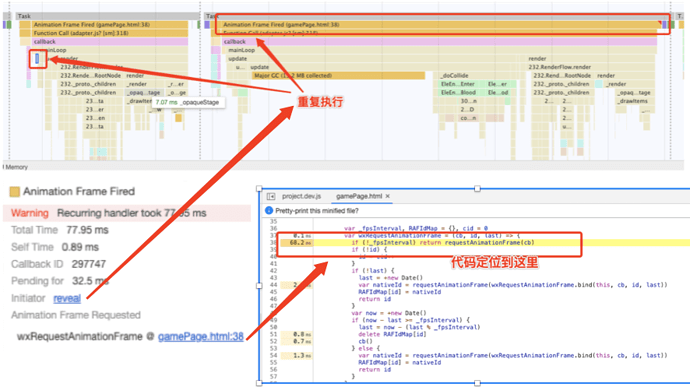

Zoom in on the timeline and select each small warning marker to see the browser's warning message in the Summary tab. The messages are all the same, Recurring handler, and they appear at regular intervals. You can use the Initiator to inspect the repeated occurrences and the specific code being executed:

Although the code location shown is requestAnimationFrame, this API call is not made by the developer; it is wrapped by the engine. The engine's frame callback is implemented with requestAnimationFrame, which means there may be repeated-call logic in the update hook, and this needs further analysis. We need to trace the specific business code step by step through the flame graph in the Main panel, which is the JavaScript call stack. If we can locate the call in our own logic, we can apply a targeted fix immediately. In the author's project, however, the information given by Recurring handler is hard to pin down: the call stack under the task consists of the engine's own rendering methods. Reading the sequence of calls horizontally, it runs after the touchMove event, which gives a direction for troubleshooting: analyze the calls made by your own code. If, as in the author's case, the tooling can only point to a series of engine rendering functions and not to specific business logic, the author suggests setting the warning aside for now, since it is caused by repeated rendering. The problem may be resolved while splitting long tasks.

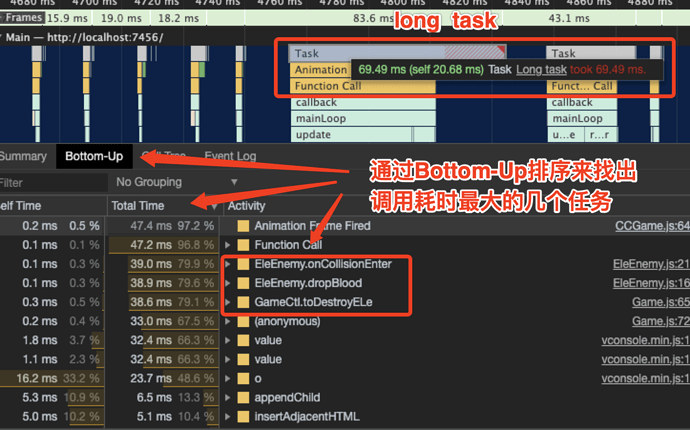

Long task

JavaScript logic with a long execution time can broadly be considered a long task. A long task occupies the main thread, so even if the page looks ready, it cannot respond to user input and clicks. How long counts as long? The RAIL model suggests keeping each task within 50 milliseconds. Chrome DevTools also flags long tasks prominently:

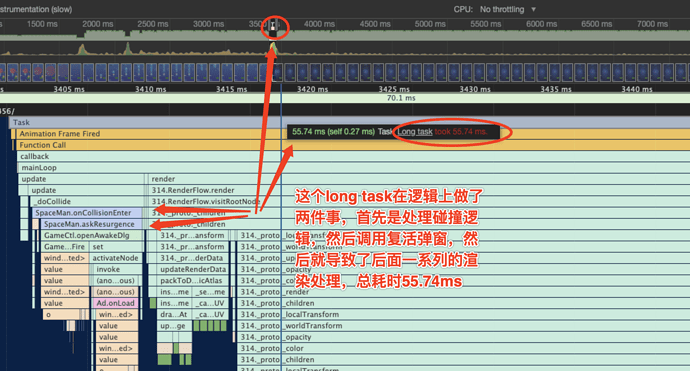

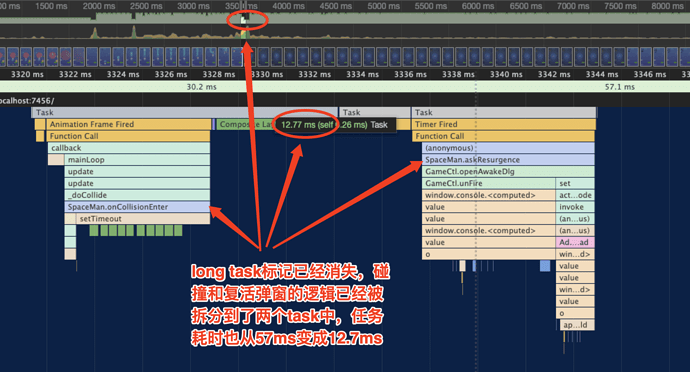
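Outside DevTools, a suspect function can also be timed by hand against the 50 ms guideline. The following is a minimal sketch; `measureTask` and `LONG_TASK_THRESHOLD_MS` are hypothetical names, and the clock is injectable so the helper can be checked with fixed values.

```javascript
const LONG_TASK_THRESHOLD_MS = 50; // RAIL guideline quoted above

// Runs `task` synchronously and reports how long it took.
// `now` defaults to Date.now and can be replaced with a finer-grained clock.
function measureTask(task, now = Date.now) {
  const start = now();
  const result = task();
  const durationMs = now() - start;
  return { result, durationMs, isLongTask: durationMs > LONG_TASK_THRESHOLD_MS };
}
```

Manual timing only covers the code you wrap, so it complements the Performance panel rather than replacing it.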

Large scripts are the main cause of long tasks. Here is a simple example of splitting a long task in the author's project. The game has a resurrection feature: health and the related damage interactions are handled in the collision callback, and when the player's health runs out, a resurrection pop-up is shown. This logic produced a long task taking 55.74 ms, with the following flame graph:

On closer analysis, although the collision handling and the call that opens the resurrection pop-up run sequentially, there is a clear logical boundary between them. The function askResurgence opens the UI pop-up, and since it is causing a performance problem, the task is split here:

// Wrap the call in setTimeout so that it runs in the next macrotask

setTimeout(() => {

this.askResurgence();

})

Looking at the flame graph after the split, the long task marker has disappeared. The original long task has been split into three tasks (the middle one is GC), and their combined time is about half that of the original long task. A review of the author's project showed that clearly delimited UI state transitions like this one are a likely source of long tasks, and several of the author's other projects mix such clearly separable logic in the same way. If you run into performance pressure, you may be able to do something similar.

To sum up, when breaking up large scripts, first reorganize the large blocks of JS logic. State that can be initialized or changed earlier or later can be moved to the application's idle periods. For example, data needed during gameplay can be loaded on the home page instead of inside the gameplay logic. This is a relatively "macro" change, though, and in most cases state and logic are hard to move earlier or later. We can also split logic at a finer time granularity by working with the JS event loop, for example deferring a task with Promise.then or setTimeout, or even creating a task queue to buffer events. The article Idle Until Urgent describes more concrete measures for breaking up scripts. Task splitting is risky: whether you advance or defer logic at the application level, or use JS microtasks or macrotasks to defer state logic, it may cause problems with state synchronization in your application. Remember to test the entire flow afterwards.
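The task queue idea mentioned above might look something like the following. This is a minimal sketch, not the author's implementation: `createTaskQueue` is a hypothetical helper that runs one queued task per macrotask, and the `schedule` parameter (defaulting to `setTimeout`) is injectable so the queue can be driven manually.

```javascript
// Runs queued tasks one per macrotask so no single turn of the event loop
// has to execute all of them back to back.
function createTaskQueue(schedule = (fn) => setTimeout(fn, 0)) {
  const pending = [];
  let scheduled = false;

  function requestRun() {
    if (scheduled) return; // a run is already waiting
    scheduled = true;
    schedule(runNext);
  }

  function runNext() {
    scheduled = false;
    const task = pending.shift();
    try {
      if (task) task();
    } finally {
      // Keep draining even if a task throws.
      if (pending.length > 0) requestRun();
    }
  }

  return {
    push(task) {
      pending.push(task);
      requestRun();
    },
    size() {
      return pending.length;
    },
  };
}
```

In the resurrection example, the pop-up call could be queued with something like `queue.push(() => this.askResurgence())`. Because tasks run later than the code that queued them, the state synchronization caveat above applies here as well.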

Long tasks can also be optimized by streamlining their logic. For example, check whether a loop can exit early once its result is known, and whether any of the logic you wrote is redundant or useless, such as this interaction logic in the author's project:

// Low-impact animation interaction; removed

this.node.setScale(1.2);

const frequency = getRandom(15, 40) / 100;

this.centerNode.runAction(

cc.sequence(

cc.scaleBy(frequency, 1.1),

cc.scaleTo(frequency, 1)

).repeatForever()

);

In the actual visuals, this small change is barely perceptible, so the animation logic is dispensable and should be cut decisively when optimizing for performance. After deleting the redundant logic, the game logic time drops noticeably.
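The other streamlining technique mentioned in this section, leaving a loop as soon as its result is known, can be sketched as follows. The scenario (checking whether any enemy is within range of the player) and all names are hypothetical and serve only as an illustration.

```javascript
// Returns true as soon as one enemy is within `radius` of the player,
// instead of always scanning the whole list.
function anyEnemyInRange(player, enemies, radius) {
  const radiusSq = radius * radius; // compare squared distances to avoid Math.sqrt
  for (const enemy of enemies) {
    const dx = enemy.x - player.x;
    const dy = enemy.y - player.y;
    if (dx * dx + dy * dy <= radiusSq) {
      return true; // early exit: the answer is already known
    }
  }
  return false;
}
```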

Other

At present, the more obvious performance problems are heat and a tendency to stutter when the number of enemies increases.

Heat is a fairly broad problem. Generally speaking, heat comes from the CPU, so reducing the CPU's workload effectively reduces it. Stuttering is affected more by frame rate and drawcalls. The usual optimization methods are:

- Reduce the frame rate: the frame rate is already set dynamically, 60 FPS during gameplay and 30 FPS otherwise

- Reduce frame callback work: there is still a lot of room to optimize the logic in update

- Reduce memory usage: there is still a lot of room for optimization here, including GC, node pools, object and node reuse, caching, and releasing references to some textures

- Drawcall optimization: frame debugging tools can be used for further analysis. Drawcall optimization should be explored further in the later stages of the project.
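The object and node reuse mentioned in the list above usually takes the form of a pool. Below is a minimal, engine-independent sketch; `createPool` and its `create` / `reset` callbacks are hypothetical names. Cocos Creator 2.x ships its own `cc.NodePool` for nodes, which this sketch does not attempt to reproduce.

```javascript
// Minimal object pool: reuses released objects instead of allocating new ones,
// which reduces garbage for the GC to collect.
// `create` builds a fresh object; `reset` restores a recycled one before reuse.
function createPool(create, reset = () => {}) {
  const free = [];
  return {
    acquire() {
      if (free.length > 0) {
        const obj = free.pop();
        reset(obj);
        return obj;
      }
      return create();
    },
    release(obj) {
      free.push(obj);
    },
    size() {
      return free.length;
    },
  };
}
```

An enemy spawner, for instance, could call `acquire()` when an enemy appears and `release()` when it dies, so that long sessions with many enemies do not keep allocating new objects.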