How to implement a room and matching system with 100,000 people online? TSRPC full-stack framework and a new solution

This story comes from Cocos Star Writer, King Wang.

Introduction

At the end of March this year, Tencent Cloud announced that its online game battle engine, MGOBE, would be officially shut down on June 1. Without MGOBE, what are the alternatives? Building on the open-source framework TSRPC, King, a TypeScript full-stack architecture expert, shows how to implement more complex real-time multiplayer online play.

TSRPC is an RPC framework designed specifically for TypeScript. After five years of iteration and validation in projects with tens of millions of users, it has now reached version 3.x.

Last year, our team used TSRPC + Cocos to make a multiplayer real-time battle game demo. After the article was published, many developers asked: How can this example be changed to support multiple rooms? How does it perform? How many users can be online at the same time? This time, building on the previous content, we will analyze in depth how to use TSRPC to implement a room system and matching system like MGOBE's, and how to use a distributed architecture to scale horizontally to support 100,000 people online at the same time.

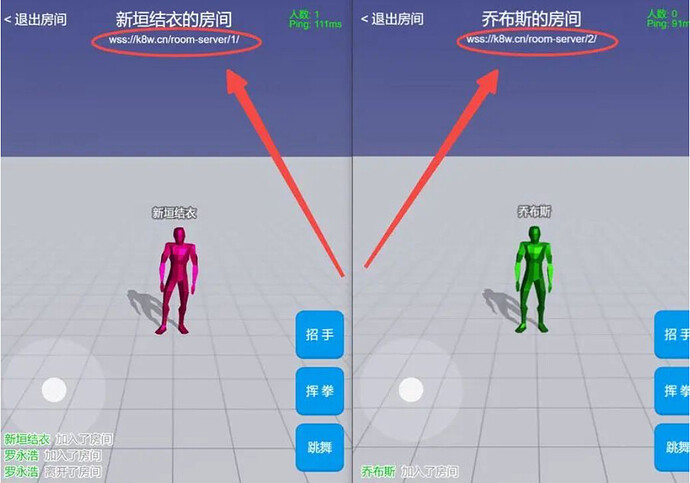

Demo effect preview

Developers who have not read the previous article are encouraged to read it first.

Demo source code and links are at the end of this article.

Cocos + TSRPC

Some people may wonder: there are so many back-end programming languages and well-established development frameworks on the market, so why use the combination of TSRPC + Cocos? Indeed, when choosing a technology stack there are only trade-offs, and there is no absolute standard answer. From this point of view, the most significant advantage of TSRPC + Cocos over other solutions is simplicity! Simple! Simple!

How simple is it? It was initially recommended to back-end developers, but as a result, many front-end developers accidentally became full-stack… The idea of “simple” is mainly reflected in the following aspects:

- Full-stack TypeScript. The front end and back end use one language, so there is no extra language to learn. It is very convenient to reuse code across both ends, especially for multiplayer games, where the server can share the front-end game logic to perform validation and judgment.

- Multi-protocol. It supports HTTP and WebSocket at the same time, and the transport protocol is independent of the architecture. Whether you need long-lived or short-lived connections, learning one framework is enough. By the way, TSRPC plans to support UDP in 2022, including on the Web (WebRTC) and mini-program platforms. With UDP, can true MOBA-style mini games be far off? Start development with WebSocket now, then switch to UDP seamlessly later!

- Runtime type checking and binary serialization. TSRPC has the world’s only TypeScript runtime type checking and binary serialization algorithm. It can directly perform binary serialization and runtime type checking of business types without introducing Protobuf.

- Free and open source. TSRPC is free and open source: you get the complete code with comments and documentation, and can deploy it entirely on your own servers. It is also released under the MIT license, meaning you can modify and repackage it at will.

After understanding the features and advantages of Cocos + TSRPC, let us enter today’s topic.

What you need

Let’s take a look at our requirements first:

- Room system: support opening rooms and playing multiple games simultaneously.

- Matching system: support random matching, solo queue, and team matching.

- Region-wide, single server: The user does not need to select a server and perceives only one server.

- Horizontal expansion: When the user scale grows, the expansion can be completed by adding machines.

- Smooth capacity expansion: The capacity expansion does not affect the running services and does not require restart or downtime.

In the final demo, you can create rooms and use random matching. You will find that the client automatically switches between multiple room services without the user noticing.

Distributed foundation

Load balancing

Deploy multiple copies

NodeJS is single-threaded, so generally, one service = one process = one thread. The resources available to a single-threaded service are limited: it can use at most one CPU core. As the number of users grows, that will soon become insufficient. At the same time, a single-point deployment cannot meet high-availability requirements. What can we do?

The answer is actually quite simple: just deploy a few more copies!

You can start several processes on the same machine (to make better use of multi-core CPUs), or you can deploy them on multiple servers. This way, you have multiple identical services.

Next, you need to distribute client requests to various services, which we call load balancing.

Distribution strategy

As the name suggests, the purpose of load balancing is to keep your servers in a relatively balanced state in terms of CPU, memory, network usage, and so on. For example, suppose you have two servers: server A is at 90%+ CPU usage while server B is at 20%. This is definitely not the result we want.

Ideally, when a client request arrives, you would check which server currently has the lowest resource usage and send the request there. You can even be more granular and refine the “load” metric into business data, such as QPS or number of rooms. But usually, for simplicity, round-robin or random distribution is used. This is sufficient for most business scenarios, and plenty of off-the-shelf tools are available. Go as simple or as elaborate as your needs require.
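To make the three strategies concrete, here is a minimal sketch of round-robin, random, and least-loaded selection. The `Backend` shape and all function names are our own assumptions for illustration; in practice an off-the-shelf proxy would normally do this for you.

```typescript
// Hypothetical description of one service instance; field names are assumptions.
interface Backend {
    url: string;
    // Any load metric you choose, e.g. CPU usage, QPS, or number of rooms
    load: number;
}

// Round-robin: hand out backends in turn, ignoring their load.
// Assumes the list is non-empty.
function createRoundRobin(backends: Backend[]): () => Backend {
    let next = 0;
    return () => {
        const picked = backends[next];
        next = (next + 1) % backends.length;
        return picked;
    };
}

// Random: every backend has the same chance of being picked.
function pickRandom(backends: Backend[]): Backend {
    return backends[Math.floor(Math.random() * backends.length)];
}

// Least-loaded: pick the backend whose load metric is currently lowest.
// Assumes the list is non-empty (reduce throws on an empty array).
function pickLeastLoaded(backends: Backend[]): Backend {
    return backends.reduce((best, cur) => (cur.load < best.load ? cur : best));
}
```

Round-robin and random need no knowledge of the servers at all, which is why they are the usual default. Least-loaded only pays off if the load metric is kept up to date.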

Front proxy

Distributing connections and requests is essentially a proxy service, which can be implemented with many off-the-shelf tools, such as:

- PM2[1]

- Nginx[2]

- Alibaba Cloud SLB [3]

- Kubernetes[4]

PM2 is an excellent tool if you’re just deploying multiple processes on a single server.

    npm i -g pm2
    # -i is the number of processes to start; max means the number of CPU cores
    pm2 start index.js -i max

Like this, you can launch multiple copies of index.js equal to the number of CPU cores you have. For NodeJS single-threaded applications, the number of processes = the number of CPU cores helps maximize performance.

The advantage of using PM2 is that your multiple processes can share the same port without conflict. For example, ten processes can all listen on port 3000, and PM2 will distribute incoming requests among them, acting as a front proxy.

If you are deploying on multiple servers, you can use Nginx upstream. If you want to save yourself the trouble, you can also directly use the load balancing services of cloud vendors, such as Alibaba Cloud’s SLB.

TIPS: If you need to use HTTPS, you can easily configure an HTTPS certificate in Nginx or the cloud vendor’s load balancer.

Of course, we recommend that you learn to use Kubernetes, which also solves the problem of service discovery and makes scaling up and down as simple as clicking plus and minus buttons. Kubernetes can be considered the general-purpose, ultimate solution at this stage. Mainstream cloud vendors now provide managed Kubernetes clusters and even serverless clusters. The only downside is that it has a learning curve.

Session persistence

Usually, we divide services into two categories: stateless services and stateful services.

For example, suppose you have deployed two copies of an HTTP API service. Since they only create, read, update, and delete records in the database, it makes no difference which copy a request reaches. In other words, if this request goes to server A and the next one goes to server B, there is no problem at all. We call such a service stateless.

The other case is different. For example, suppose you have deployed 10 King of Glory room services, and you are connected to server A playing in a specific room when the network suddenly drops. When you reconnect, you must connect to server A again, because the half-finished game and your teammates (all of the state) are on server A! This kind of service is what we call stateful.

Obviously, stateful services share a common requirement: whichever server a client connected to last time, it must keep connecting to that same server next time. This feature is often referred to as “session persistence.”

Session persistence is a little troublesome to implement. Nginx and cloud vendors’ load balancers support similar features, but they are not that convenient. In our practice, there is a more lightweight approach, which will be introduced in the specific scheme below.

That is all for load balancing for now. To summarize: deploy multiple copies of a service to achieve horizontal scaling and high availability.

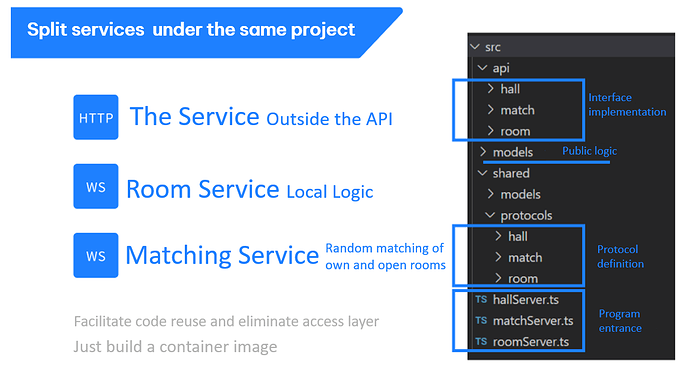

Split service

Next, we will introduce splitting services, that is, how to split a large service into multiple different small services.

Why split

For an application, we often split into several services (such as the popular microservice architecture). Why is this?

There are development considerations, such as making it easier to divide work and decouple project modules, for example by splitting a large project with 200 interfaces into five small projects with 40 interfaces each. There are also runtime considerations. For example, different modules have different resource requirements: you can deploy 100 real-time game room services but only five matching services, which makes for good resource planning and management.

How to split

First, design which services you want to split according to your business, organizational structure, and runtime resource planning considerations. Then, there are two ways to choose:

- Split into different independent projects

- Split entry points under the same project

Generally speaking, the projects are not entirely independent of one another. A considerable amount of code can be shared, such as database table structure definitions, login authentication logic, common business logic, and so on.

If you choose to split into different projects, you need to consider sharing the code between the various projects. For example:

- Shared via Git Submodules

- Share via NPM

- Share via MonoRepo

- Automatically distribute code to multiple projects with Git pipelines

Of course, either way introduces additional learning and maintenance costs. If your situation allows, we recommend that you split services under the same project.

- First, split the protocol and API directories according to the different services.

- Split index.ts into multiple entry files.

- When developing, run each service independently. There are two options:

  - Split into multiple tsrpc.config.ts files: npx tsrpc-cli dev --config xxx.config.ts

  - Only keep a single tsrpc.config.ts, and specify the startup entry through the entry parameter: npx tsrpc-cli dev --entry src/xxx.ts

Splitting services under the same project has several advantages:

- Code is naturally reused across services, with no additional learning or maintenance cost.

- The operation and deployment cost is lower. You only need to build a single program or container image to deploy every service (just change the startup entry point).

Dynamic configuration

Finally, you can control the dynamic configuration of the runtime (such as the running port number, etc.) through environment variables to achieve flexible deployment of multiple services.

    // Control configuration through the environment variable PORT
    const port = parseInt(process.env['PORT'] || '3000', 10);

Set the environment variables at runtime. The commands differ between Windows and Linux, so you can use the cross-platform cross-env:

    npm i -g cross-env
    cross-env FIRST_ENV=one SECOND_ENV=two npx tsrpc-cli dev --entry src/xxx.ts
    cross-env FIRST_ENV=one SECOND_ENV=two node xxx.js

If you use PM2, you can also use its ecosystem.config.js to complete the configuration:

    module.exports = {
        apps: [
            {
                name: 'AAA',
                script: 'a.js',
                env: {
                    PORT: '3000',
                    FIRST_ENV: 'One',
                    SECOND_ENV: 'Two'
                }
            },
            // More...
        ]
    };

    # Start up
    pm2 start ecosystem.config.js
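Once a service reads several environment variables, it can help to collect them in one place and validate them at startup. The following is only a sketch: the `ServiceConfig` shape, the `MATCH_SERVER_URL` variable, and its default value are assumptions for illustration and are not part of the demo.

```typescript
// Hypothetical config shape; MATCH_SERVER_URL and its default are assumptions.
interface ServiceConfig {
    port: number;
    matchServerUrl: string;
}

// Takes the environment as a parameter so it is easy to test;
// in a real entry file you would call loadConfig(process.env).
function loadConfig(env: Record<string, string | undefined>): ServiceConfig {
    const port = parseInt(env['PORT'] || '3000', 10);
    if (isNaN(port) || port <= 0 || port > 65535) {
        // Fail fast at startup instead of listening on a bogus port
        throw new Error(`Invalid PORT: ${env['PORT']}`);
    }
    return {
        port,
        matchServerUrl: env['MATCH_SERVER_URL'] || 'http://127.0.0.1:3100',
    };
}
```

With this in place, the same build can be started as different services purely by changing the environment, for example through cross-env or the PM2 ecosystem file shown above.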

Core Architecture

Project structure

Split into the following services under the same project:

- Room service: a WebSocket service for in-game room logic; a stateful service.

- Matching service: an HTTP service for room creation and random matching, treated as a stateless service (detailed below).

A room is essentially an aggregation of a group of Connections. We encapsulate the room in a class that manages Connections joining and leaving and handles the logic for sending and receiving their messages.
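As a rough illustration of this idea, a room class might look like the sketch below. `RoomConn` is a stand-in we made up for the framework's connection object, and the message format is a plain string for brevity; the demo's actual class is more complete.

```typescript
// Minimal stand-in for a connection; in a real server this would be the
// framework's WebSocket connection object. The shape here is an assumption.
interface RoomConn {
    id: string;
    send(msg: string): void;
}

class Room {
    readonly id: string;
    readonly maxUsers: number;
    private conns: RoomConn[] = [];

    constructor(id: string, maxUsers: number) {
        this.id = id;
        this.maxUsers = maxUsers;
    }

    get userCount(): number {
        return this.conns.length;
    }

    // Returns false when the room is full or the connection has already joined
    join(conn: RoomConn): boolean {
        if (this.conns.length >= this.maxUsers) return false;
        if (this.conns.some(c => c.id === conn.id)) return false;
        this.conns.push(conn);
        this.broadcast(`${conn.id} joined`);
        return true;
    }

    leave(connId: string): void {
        const before = this.conns.length;
        this.conns = this.conns.filter(c => c.id !== connId);
        if (this.conns.length < before) this.broadcast(`${connId} left`);
    }

    // Send a message to everyone currently in the room
    broadcast(msg: string): void {
        for (const c of this.conns) c.send(msg);
    }
}
```

Because all of a room's connections live in one object in one process, the room service is stateful by nature.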

Matching, in essence, combines the entries in the matching queue according to certain rules and then returns the result. So a matching operation is a request-response exchange: when the request is made, the current user is added to the matching queue, and the response is returned later by the matching logic that runs periodically. A short-lived HTTP connection is therefore enough. Of course, you can set a longer timeout.
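The request-response pattern described here can be sketched with a queue of pending promises: the API handler awaits `enqueue`, and a periodic job resolves the waiting entries. All names below (`MatchQueue`, `MatchTicket`, `FindRoom`) are hypothetical, and the rule for choosing a room is deliberately left to the caller.

```typescript
// What a matched user receives. Field names are assumptions.
interface MatchTicket {
    serverUrl: string;
    roomId: string;
}

interface PendingMatch {
    userId: string;
    resolve: (ticket: MatchTicket) => void;
}

// Caller-supplied rule: return a room for this user, or undefined to keep waiting
type FindRoom = (userId: string) => MatchTicket | undefined;

class MatchQueue {
    private waiting: PendingMatch[] = [];

    get pending(): number {
        return this.waiting.length;
    }

    // Called by the request handler; the returned promise is the pending response
    enqueue(userId: string): Promise<MatchTicket> {
        return new Promise<MatchTicket>(resolve => {
            this.waiting.push({ userId, resolve });
        });
    }

    // One matching round, meant to be called periodically (e.g. via setInterval).
    // Returns how many users were matched in this round.
    runOnce(findRoom: FindRoom): number {
        const stillWaiting: PendingMatch[] = [];
        let matched = 0;
        for (const item of this.waiting) {
            const ticket = findRoom(item.userId);
            if (ticket) {
                item.resolve(ticket);
                matched++;
            } else {
                stillWaiting.push(item);
            }
        }
        this.waiting = stillWaiting;
        return matched;
    }
}
```

A real implementation would also remove entries whose HTTP request has timed out or been cancelled; that is omitted here to keep the sketch short.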

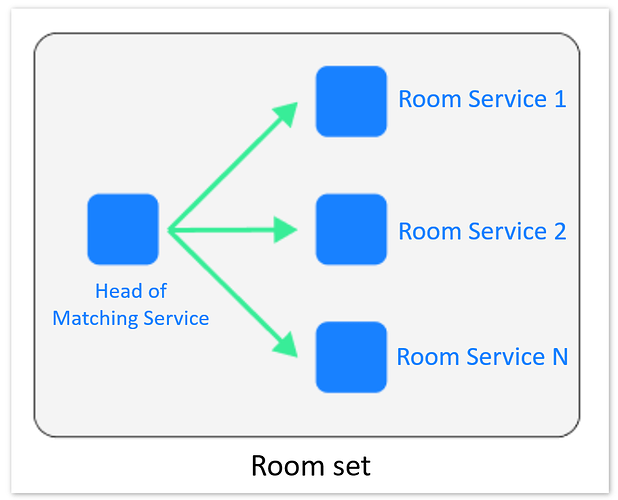

District-wide distributed architecture

Room group

Typically, room service requires more server resources, and matching service requires fewer server resources. Therefore, the matching and room services are designed to have a one-to-many relationship. One matching service manages the room creation and matching of multiple room services, which is regarded as a room group.

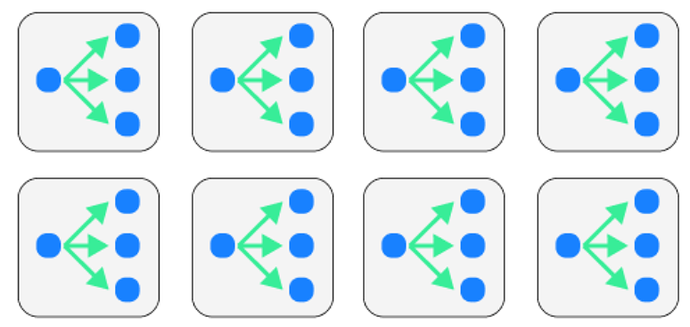

You can deploy one or more room groups to form a distributed room group according to actual needs.

No access layer service

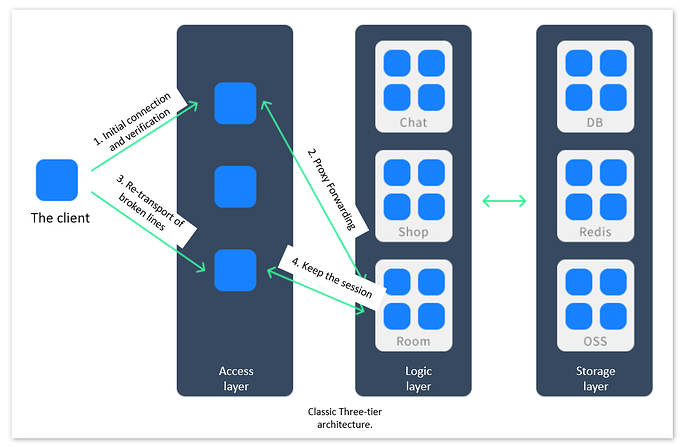

Doesn’t a room group look a bit like a server region in an online game? However, since our requirement is a single server for all regions, we cannot let users notice any server selection. The classic architecture for such a region-wide server is a standard three-tier structure with an access layer in front.

In the access layer, operations such as authentication, proxy forwarding, and session persistence are performed uniformly. Obviously, the access layer service is very important, and developing and maintaining it is also fairly complex.

However, if we use WebSocket and split the services under the same project, the architecture can be significantly simplified! We can do away with the access layer service entirely.

Since everything is in the same project, it is very easy for each service to share the access layer logic, such as authentication. For example, a login credential is generated after a user logs in, and it can then be used to access each service. See Login status and authentication[5].

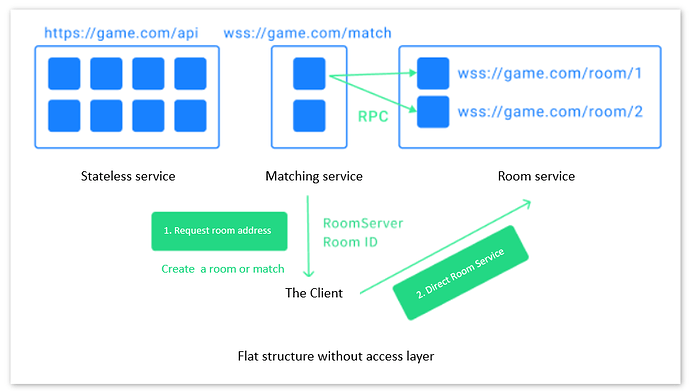

Since there is no access layer, there is no proxy forwarding, and clients connect directly to different services through different URLs. For stateless services, multiple deployments can be exposed at the same URL through a load-balancing front proxy. Stateful services need session persistence, so each deployment is exposed at its own URL.

In this way, we avoid developing a complex access layer and eliminate the latency added by an intermediate proxy.
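Seen from the client, this URL-based form of session persistence comes down to remembering which room service URL it was given. The sketch below shows one way a client might decide where to connect; `RoomTicket`, `ConnectTarget`, and `nextConnectTarget` are names we invented for illustration.

```typescript
// What the client saved when it last joined a room. Field names are assumptions.
interface RoomTicket {
    serverUrl: string; // URL of one specific room service deployment
    roomId: string;
}

type ConnectTarget =
    | { kind: 'room'; url: string; roomId: string }
    | { kind: 'match'; url: string };

// Decide where the client should connect next.
function nextConnectTarget(sharedMatchUrl: string, lastTicket?: RoomTicket): ConnectTarget {
    if (lastTicket) {
        // Stateful: go straight back to the same deployment, so no proxy has to
        // remember which server this user was on
        return { kind: 'room', url: lastTicket.serverUrl, roomId: lastTicket.roomId };
    }
    // Stateless: any matching service behind the shared URL will do
    return { kind: 'match', url: sharedMatchUrl };
}
```

After a disconnect, the client calls this with its saved ticket and reconnects to exactly the room service that holds its game state.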

Inter-Service RPC

According to the above architecture, the matching service needs to know the real-time status of all its rooms to complete the matching logic. This requires RPC communication between the matching service and the room service. Don’t forget that TSRPC is cross-platform, so this can be implemented neatly with TSRPC’s NodeJS client.

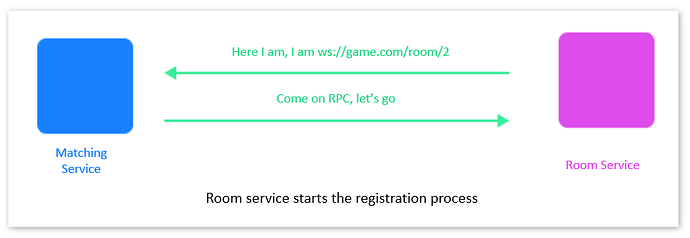

Since we require smooth expansion, adding a service must not require restarting existing services, so we need to implement a simple service registration mechanism ourselves. This solution works as follows:

- Before the room service starts, specify through configuration the URL of the matching service it belongs to. You can specify a single shared URL, as for the stateless services mentioned above, in which case one of the matching services is selected at random. Or, for more fine-grained control, bind a separate URL to each matching service and choose one according to your own rules.

- After the room service starts, it actively registers with the matching service, and then the two establish a long WebSocket connection to open RPC communication.

- The room service periodically synchronizes real-time room status information with the matching service.

- The matching service manages rooms by calling the room service’s interfaces, such as CreateRoom.
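On the matching service side, the registration and periodic status sync above could be backed by a small in-memory registry like the sketch below. The class and field names are hypothetical. The timeout-based pruning is our own assumption: the demo relies on the long WebSocket connection between the two services, so a disconnect can be detected directly.

```typescript
interface RoomServerState {
    url: string;
    roomCount: number;
    userCount: number;
    lastSeen: number; // timestamp (ms) of the latest report
}

class RoomServerRegistry {
    private servers: RoomServerState[] = [];
    private timeoutMs: number;

    constructor(timeoutMs: number) {
        this.timeoutMs = timeoutMs;
    }

    // Called when a room service registers and on every periodic status sync
    report(url: string, roomCount: number, userCount: number, now: number): void {
        const existing = this.servers.find(s => s.url === url);
        if (existing) {
            existing.roomCount = roomCount;
            existing.userCount = userCount;
            existing.lastSeen = now;
        } else {
            // First report from this URL: this is the registration
            this.servers.push({ url, roomCount, userCount, lastSeen: now });
        }
    }

    // Drop room services that have not reported within the timeout
    prune(now: number): void {
        this.servers = this.servers.filter(s => now - s.lastSeen <= this.timeoutMs);
    }

    list(): RoomServerState[] {
        return this.servers.slice();
    }
}
```

Because a new room service simply shows up in the registry with its first report, nothing already running needs to be restarted or reconfigured.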

Since each room service deployment has its own URL, after startup you need to update the configuration of the front proxy (such as Nginx or Kubernetes Ingress) to bind the corresponding URL to the new deployment. This process can, of course, also be automated by a program (not provided; you can implement it yourself or update the configuration manually).

Effect verification

Open room

The complete request process for opening a room is as follows:

- The client initiates a create room request to the matching service.

- The matching service selects one of the N room services registered with it (such as the one with the fewest rooms), creates a room on it through RPC, and gets the room ID.

- The matching service returns the room ID and the URL of the corresponding room service to the client.

- The client directly connects to the room service and joins the room.

- The client invites other friends to join, sending them the room service URL + room ID.

- Other friends can also directly connect to the room service to join the game.
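Steps 2 and 3 of this flow, as they might look inside the matching service, are sketched below. The RPC call to the room service is passed in as a function so the example stays self-contained. `handleCreateRoom`, `CreateRoomRpc`, and `RoomServerSummary` are names we made up; they are not TSRPC APIs.

```typescript
interface RoomServerSummary {
    url: string;
    roomCount: number;
}

// Stand-in for the RPC to a room service; resolves to the new room's ID
type CreateRoomRpc = (serverUrl: string, roomName: string) => Promise<string>;

async function handleCreateRoom(
    servers: RoomServerSummary[],
    roomName: string,
    createRoomRpc: CreateRoomRpc
): Promise<{ serverUrl: string; roomId: string }> {
    if (servers.length === 0) {
        throw new Error('No room service is registered');
    }
    // Pick the room service that currently hosts the fewest rooms
    const target = servers.reduce((best, cur) => (cur.roomCount < best.roomCount ? cur : best));
    const roomId = await createRoomRpc(target.url, roomName);
    // Optimistic local update until the next status sync arrives
    target.roomCount += 1;
    // The client connects to serverUrl directly and shares both values with friends
    return { serverUrl: target.url, roomId };
}
```

The returned pair of room service URL and room ID is all a friend needs in order to join, which is why invitations work across room groups.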

As you can see, even with multiple room groups, players can still create rooms and play with each other without restriction.

Random match

The complete request process for random matching is as follows:

- The client initiates a random matching request to the matching service.

- The matching service adds the connection to the matching queue.

- The matching service performs matching on a regular basis, selects a suitable room for the user based on real-time room information, and returns the room service URL + room ID.

- The client directly connects to the room service and joins the room through the room service URL + room ID.
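What counts as a “suitable room” in step 3 is up to your game. As one possible rule, the sketch below prefers the fullest room that still has a free seat, so that rooms fill up quickly. Both this rule and the `MatchableRoom` shape are assumptions for illustration.

```typescript
// One entry of the real-time room info synced from the room services.
// Field names are assumptions.
interface MatchableRoom {
    serverUrl: string;
    roomId: string;
    userCount: number;
    maxUsers: number;
}

// Returns the fullest room that still has a free seat,
// or undefined when every room is full (the caller may then create a new room).
function pickRoomForMatch(rooms: MatchableRoom[]): MatchableRoom | undefined {
    let best: MatchableRoom | undefined;
    for (const room of rooms) {
        if (room.userCount >= room.maxUsers) continue; // full, skip
        if (!best || room.userCount > best.userCount) best = room;
    }
    return best;
}
```

The result already contains the room service URL and room ID that are returned to the client in step 3.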

Some developers may ask: if there are multiple room groups, doesn’t that mean a player is matched only against some of the players rather than all of them?

In fact, by the time you need multiple room groups, you already have a considerable player base. Matching does not require all players to be in one pool. The number of users in a single room group should already be enough to keep matching times acceptable.

In special cases, for example when you need to match users within specific groups rather than mixing everyone into one room group, you can bind different room groups to different URLs to pre-distribute users. All the work that an access layer proxy would do by forwarding can be handled by URLs instead.

Horizontal expansion and smooth expansion

Both the room service and the matching service can be scaled horizontally, and both support smooth expansion:

- To add room service deployments, you only need to configure and start the new room service. Through the service registration process above, the matching service automatically takes it under its management without affecting existing services.

- Adding matching service deployments is as smooth and transparent as adding any stateless service.

Therefore, as long as you have enough machines and other dependencies such as the database and Redis can hold up, having 100,000 people online simultaneously is not a problem.

While chatting with friends, some asked about the difference between this TSRPC-based solution and Pomelo. Pomelo is a very good framework that implements, in NodeJS, many mechanisms such as the access layer, service registration and discovery, and RPC between services. But today, things have changed.

In terms of cluster management, a standardized solution such as Kubernetes has emerged, which is more professional, reliable, and high-performance in capacity expansion, service registration and discovery, URL routing, etc. Cloud vendors’ managed services and middleware are also improving…

A finer division of labor has given rise to more specialized tools, so today you no longer need to do all of this work yourself in NodeJS. Using these toolchains lets you focus your limited energy on the business and achieve more with less effort.

Resource links

• Download the Demo source code

https://store.cocos.com/app/detail/3766

• Demo online experience address

https://tsrpc.cn/room-management/index.html

• TSRPC Github

• TSRPC official documentation

More links

[1] PM2

[2] upstream

[3] Alibaba Cloud’s SLB

[4] Kubernetes

[5] Login status and authentication