An Introduction To Draw Call Performance Optimization

Community member Blake submitted this article. Drawing on many years of practical experience in the game industry, he shares how to optimize DrawCall performance in Cocos Creator 3.x.

Introduction

Rendering is usually the largest source of performance overhead in a game, so mastering rendering optimization is crucial for performance tuning. Rendering can be optimized in many ways, and reducing DrawCalls is one of the most important. This article explains why DrawCalls should be reduced and how to reduce them in Cocos Creator 3.x.

Content

- Why reduce DrawCall
- Principles, advantages, and disadvantages of commonly used batching techniques
- How v3.x optimizes DrawCall for 3D objects
- How v3.x optimizes DrawCall for 2D UI objects

Why reduce DrawCall

When the game engine draws a frame, it first finds the objects in the scene and then submits them to the GPU for drawing. Suppose there are 100 objects to draw in the game scene. When the engine submits these 100 objects to the GPU one by one, its lower layer performs the following operations for each object:

- The CPU passes the data required for object rendering to the GPU (such as mesh models, material parameters, texture objects, world matrices, etc.).
- After the CPU prepares the data, it issues a drawing command (Draw cmd) to the GPU.
- The GPU starts rendering and renders the data submitted by the CPU to our display target.

Here are some concepts I need to introduce first:

- DrawCall: The process in which the CPU submits data to the GPU and then issues a rendering command to the GPU is called a DrawCall, also known as a batch.
- The number of DrawCalls (batches): the number of rendering commands (DrawCalls) that the game engine needs to submit to the GPU to draw all objects in a game scene. If 100 objects are submitted to the GPU for drawing one by one, then 100 rendering commands must be submitted, and the number of DrawCalls is 100. It can also be understood as the number of batches into which the objects in a scene are divided for rendering.
- Batching (reducing the number of DrawCalls): Combining several objects and submitting them to the GPU together for rendering is called batching. For example, if the first 50 of 100 objects are combined and the last 50 are combined, rendering these 100 objects only requires submitting 2 batches, that is, 2 DrawCalls. Batching reduces the number of DrawCalls, and when we talk about reducing DrawCalls we usually mean batching.
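The counting in the concepts above can be sketched in a few lines of TypeScript. `MockDevice` and its `draw` method are hypothetical stand-ins that only count commands; they are not part of Cocos Creator or of any graphics API.

```typescript
// Hypothetical stand-in for a GPU device: it only counts draw commands.
class MockDevice {
    drawCalls = 0;
    objectsDrawn = 0;

    // One call to draw() models one DrawCall, however many objects it carries.
    draw(objects: readonly string[]): void {
        this.drawCalls += 1;
        this.objectsDrawn += objects.length;
    }
}

const sceneObjects: string[] = Array.from({ length: 100 }, (_, i) => `object-${i}`);

// Submit the 100 objects one by one: 100 DrawCalls.
const oneByOne = new MockDevice();
for (const obj of sceneObjects) {
    oneByOne.draw([obj]);
}

// Submit the first 50 and the last 50 as two batches: 2 DrawCalls.
const batched = new MockDevice();
batched.draw(sceneObjects.slice(0, 50));
batched.draw(sceneObjects.slice(50));

console.log(oneByOne.drawCalls, batched.drawCalls); // 100 2
```

Both devices end up drawing the same 100 objects; only the number of commands differs.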

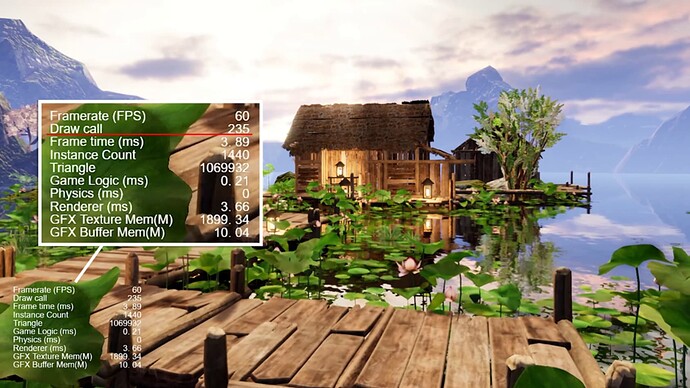

In Cocos Creator, we can see the current number of DrawCalls through debugging parameters.

The performance overhead caused by DrawCalls lies mainly in command assembly on the CPU side. The more DrawCalls there are, the more commands must be assembled and the more CPU resources are consumed. DrawCalls have an especially large impact on low-end machines and on iOS devices, which cannot use JIT.

Next, let’s analyze why batching (reducing DrawCalls) can improve rendering performance:

- The CPU submits the objects that can be batched to the GPU together, which avoids submitting the same data repeatedly, for example when several objects share one model. Submitting data from the CPU to the GPU is relatively expensive, more so than copying data within main memory.
- Drawing 100 objects one by one takes 100 draw commands, while submitting the 100 objects as one batch takes only one draw command, so the GPU only needs to be started once to complete the rendering.
- Each time the GPU starts drawing it has a certain throughput, so the more triangles we submit at once, the more triangles the GPU can process in one rendering pass. This is like a factory assembly line: starting the line costs about the same whether it produces 1 item or 100, so it pays to schedule 100 items per run. Submitting rendering work in batches therefore improves GPU throughput and efficiency.

After the above analysis, it is not difficult to conclude that batching objects together for rendering as much as possible (reducing the number of DrawCalls) plays a very important role in rendering optimization.
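As a rough illustration of this conclusion, the following toy model charges a fixed CPU cost per DrawCall plus a small cost per object. Both constants are invented units chosen only to show the shape of the trade-off; they are not measurements from Cocos Creator or from any device.

```typescript
// Toy CPU-side cost model. Both constants are made-up illustrative units.
const COST_PER_DRAW_CALL = 10;  // hypothetical: command assembly and submission
const COST_PER_OBJECT_DATA = 1; // hypothetical: preparing one object's data

function cpuCost(objectCount: number, drawCalls: number): number {
    return drawCalls * COST_PER_DRAW_CALL + objectCount * COST_PER_OBJECT_DATA;
}

const unbatchedCost = cpuCost(100, 100); // 100 * 10 + 100 * 1 = 1100
const batchedCost = cpuCost(100, 2);     // 2 * 10 + 100 * 1 = 120

console.log(unbatchedCost, batchedCost); // 1100 120
```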

Commonly used batch technology

First, let’s look at a concept referred to as “can be batched.” We call objects that use the same material and a batchable rendering component (such as MeshRenderer) “batchable.” The objects to be batched must first meet the conditions of “can be batched”.

Commonly used batching techniques include static batching, dynamic batching, and GPU Instancing. Static batching and dynamic batching require that the objects share the same material, although their models can differ. GPU Instancing requires that the objects share both the same model and the same material.
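These two requirements can be written as simple predicates. The `Renderable` shape below is a hypothetical simplification for illustration; it ignores the rendering-component condition mentioned above and is not an engine type.

```typescript
// Hypothetical minimal description of a renderable object.
interface Renderable {
    name: string;
    mesh: string;     // identifier of the mesh (model) asset
    material: string; // identifier of the material asset
}

// Static and dynamic batching: meshes may differ, the material must match.
function canMergeBatch(a: Renderable, b: Renderable): boolean {
    return a.material === b.material;
}

// GPU Instancing: both the mesh and the material must match.
function canInstance(a: Renderable, b: Renderable): boolean {
    return a.mesh === b.mesh && a.material === b.material;
}

const cone: Renderable = { name: "cone", mesh: "cone-mesh", material: "stone" };
const cubeA: Renderable = { name: "cubeA", mesh: "cube-mesh", material: "stone" };
const cubeB: Renderable = { name: "cubeB", mesh: "cube-mesh", material: "stone" };

console.log(canMergeBatch(cone, cubeA)); // true
console.log(canInstance(cone, cubeA));   // false
console.log(canInstance(cubeA, cubeB));  // true
```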

Static batch

Static batching merges the meshes of batchable objects in advance, according to their positions, into one large new mesh, and then draws that mesh. Because these objects meet the batching conditions, they all use the same material, so rendering them only requires submitting the merged mesh to the GPU once. All of the objects are displayed, and the number of DrawCalls is reduced.
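A minimal sketch of the merging step follows, assuming each object only has a world-space translation (a real engine would apply the full world matrix and also merge normals, UVs, and so on). The `Mesh` and `StaticObject` types are hypothetical and are not Cocos Creator types.

```typescript
interface Mesh {
    positions: number[]; // x, y, z per vertex, in the object's local space
    indices: number[];   // triangle list indexing into positions
}

interface StaticObject {
    mesh: Mesh;
    offset: [number, number, number]; // world position (translation only in this sketch)
}

function mergeStatic(objects: readonly StaticObject[]): Mesh {
    const merged: Mesh = { positions: [], indices: [] };
    for (const { mesh, offset } of objects) {
        // Indices of this object must be shifted past the vertices already merged.
        const baseVertex = merged.positions.length / 3;
        for (let i = 0; i < mesh.positions.length; i += 3) {
            merged.positions.push(
                mesh.positions[i] + offset[0],
                mesh.positions[i + 1] + offset[1],
                mesh.positions[i + 2] + offset[2],
            );
        }
        for (const index of mesh.indices) {
            merged.indices.push(index + baseVertex);
        }
    }
    return merged;
}

const triangle: Mesh = { positions: [0, 0, 0, 1, 0, 0, 0, 1, 0], indices: [0, 1, 2] };
const mergedMesh = mergeStatic([
    { mesh: triangle, offset: [0, 0, 0] },
    { mesh: triangle, offset: [5, 0, 0] },
]);

console.log(mergedMesh.indices); // [0, 1, 2, 3, 4, 5]
```

Because the positions are baked into the merged mesh once, moving one of the source objects afterwards has no effect on what is drawn.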

But static batching also has its disadvantages:

- Static batching requires pre-computing the merged mesh, which increases initialization time at runtime.
- Once the merged mesh has been precomputed, the objects can no longer “move.” Static batching is therefore unsuitable for frequently moving objects; dynamic batching can be used for those instead.
- Merging the meshes of static objects may increase memory overhead.

Some readers may have doubts about the third point above: the mesh data of 100 objects is still the mesh data of 100 objects, so why would merging increase memory overhead?

First of all, the word “may” means that memory increases in some cases and not in others. If the 100 objects are entirely different, the memory taken by the merged mesh’s vertices is the same as before the merge. But if the 100 objects all use exactly the same mesh, only one copy of that mesh needs to be kept before the merge, whereas the merged mesh contains 100 copies that differ only in their vertex positions, so merging increases the memory overhead. Therefore, in actual game development, we do not use static batching for scenes like forests (with many identical trees).
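The forest case can be worked through with some arithmetic. The tree count matches the example above; the vertex count and vertex size are assumed values picked for illustration only.

```typescript
// Hypothetical numbers for illustration only.
const TREE_COUNT = 100;
const VERTICES_PER_TREE = 1000;
const BYTES_PER_VERTEX = 32; // assumed: position + normal + UV = 8 floats of 4 bytes

// Before merging: all trees share one mesh, so its vertices are stored once.
const sharedMeshBytes = VERTICES_PER_TREE * BYTES_PER_VERTEX; // 32000

// After static batching: every tree's vertices are baked into the merged mesh.
const mergedMeshBytes = TREE_COUNT * VERTICES_PER_TREE * BYTES_PER_VERTEX; // 3200000

console.log(mergedMeshBytes / sharedMeshBytes); // 100
```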

Dynamic batching

Dynamic batching means that before each rendering, the CPU calculates the world coordinates of every vertex of the batchable objects (model vertex coordinates * world transform matrix) and submits them to the GPU, with the identity matrix used as the world matrix, to achieve batching. Dynamic batching is suitable for moving objects and incurs no additional memory overhead.

However, because the CPU has to recalculate the coordinates before each rendering, dynamic batching will increase the burden on the CPU. In actual use, a trade-off should be made between the additional overhead of the CPU and the improvement brought by batching. Therefore, dynamic batching is not a panacea, and it is not suitable for rendering objects with too many vertices.
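The per-frame work described above can be sketched as follows. The column-major matrix layout and all type and function names are assumptions made for this example, not engine code; the `cpuTransforms` counter is only there to show that the CPU cost grows with the total vertex count.

```typescript
type Mat4 = number[]; // 16 numbers, column-major (assumed layout for this sketch)
type Vec3 = [number, number, number];

const IDENTITY: Mat4 = [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1];

function translation(x: number, y: number, z: number): Mat4 {
    return [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, x, y, z, 1];
}

// Transform one point (w = 1) by a column-major 4x4 matrix.
function transformPoint(m: Mat4, p: Vec3): Vec3 {
    const [x, y, z] = p;
    return [
        m[0] * x + m[4] * y + m[8] * z + m[12],
        m[1] * x + m[5] * y + m[9] * z + m[13],
        m[2] * x + m[6] * y + m[10] * z + m[14],
    ];
}

interface DynamicObject {
    localVertices: Vec3[];
    world: Mat4;
}

// Called every frame: the CPU bakes world positions into one vertex stream.
function buildDynamicBatch(objects: readonly DynamicObject[]) {
    const vertices: number[] = [];
    let cpuTransforms = 0;
    for (const obj of objects) {
        for (const v of obj.localVertices) {
            vertices.push(...transformPoint(obj.world, v));
            cpuTransforms += 1;
        }
    }
    // The batch is drawn with the identity matrix: vertices are already in world space.
    return { vertices, worldMatrix: IDENTITY, cpuTransforms };
}

const quad: Vec3[] = [[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]];
const frameBatch = buildDynamicBatch([
    { localVertices: quad, world: translation(2, 0, 0) },
    { localVertices: quad, world: translation(0, 3, 0) },
]);

console.log(frameBatch.cpuTransforms); // 8
```

Two quads already cost 8 vertex transforms per frame, which is why the technique suits objects with few vertices.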

GPU Instancing batch

For N instances of the same object in the game scene, GPU Instancing can be used. Its principle is to submit the object’s model once, then submit each instance’s position, rotation, scale, and other information to the GPU, which then draws the N instances.

From a technical point of view, GPU Instancing is an excellent batching method, which brings almost no additional overhead. However, GPU Instancing requires Shader support, and some early graphics cards do not support GPU Instancing features.
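To illustrate the idea of "one model plus per-instance data", the sketch below packs instance information into a single typed array. The five-float layout and the `InstanceTransform` type are assumptions for this example; real engines typically upload a full matrix per instance and define their own layout.

```typescript
interface InstanceTransform {
    position: [number, number, number];
    rotationY: number; // radians; a single-axis rotation keeps the sketch short
    scale: number;     // uniform scale
}

const FLOATS_PER_INSTANCE = 5; // x, y, z, rotationY, scale (assumed layout)

// Pack per-instance data into one buffer that accompanies the shared mesh.
function packInstances(instances: readonly InstanceTransform[]): Float32Array {
    const buffer = new Float32Array(instances.length * FLOATS_PER_INSTANCE);
    instances.forEach((inst, i) => {
        buffer.set([...inst.position, inst.rotationY, inst.scale], i * FLOATS_PER_INSTANCE);
    });
    return buffer;
}

const trees: InstanceTransform[] = [
    { position: [0, 0, 0], rotationY: 0, scale: 1 },
    { position: [4, 0, 2], rotationY: 0.5, scale: 2 },
    { position: [8, 0, -3], rotationY: 1, scale: 1.5 },
];
const instanceBuffer = packInstances(trees);

console.log(instanceBuffer.length); // 15
```

The mesh is submitted once, and the 15 floats carry everything that differs between the 3 instances.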

Optimize DrawCall for 3D objects

After understanding the principles, advantages, and disadvantages of these batching techniques, you can consider which one to use. But before that, there are two critical tasks:

- Analyze where DrawCalls are consumed;
- Create conditions that make as many objects as possible “batchable.”

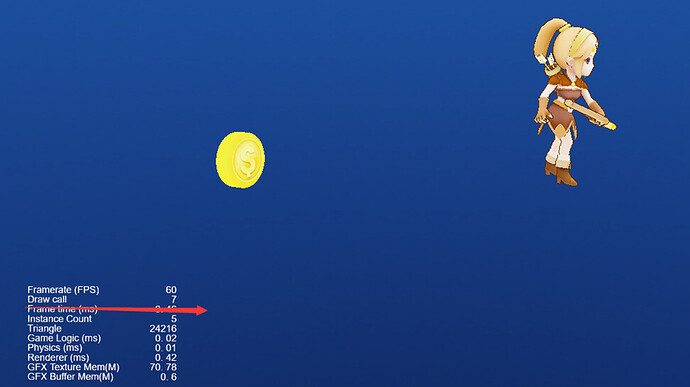

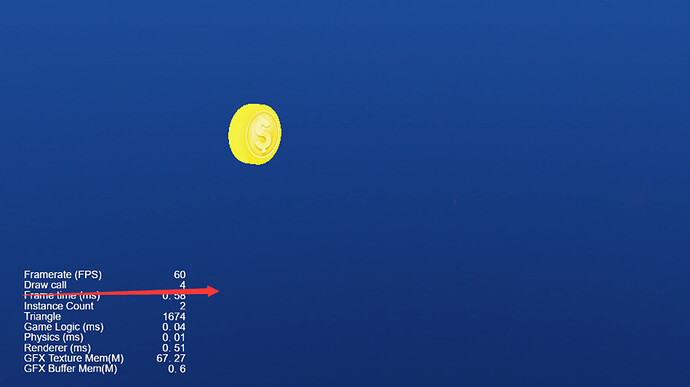

How can you determine how many DrawCalls a specific object consumes? Generally, we make an estimate from the way the scene is organized and then confirm it by showing and hiding objects and watching how the number of DrawCalls changes. In the following example, after the character is hidden, the DrawCall count drops from 7 to 4, indicating that drawing the character takes 3 DrawCalls.

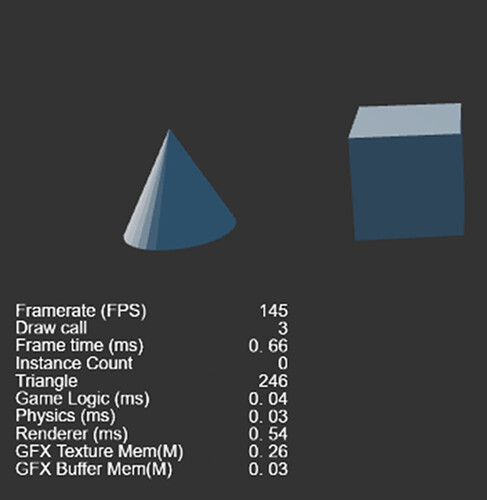

Player shown

Player hidden
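The show/hide measurement can be modelled as a simple difference. The scene below is a hypothetical model in which each node already knows its own cost; the totals of 7 and 4 come from the example, but how the remaining 4 DrawCalls are split between `ground` and `props` is invented. In a real project the totals are read from the engine's statistics display.

```typescript
// Hypothetical scene model: each node reports how many DrawCalls it costs while active.
interface SceneNode {
    name: string;
    active: boolean;
    drawCalls: number;
}

function countDrawCalls(nodes: readonly SceneNode[]): number {
    return nodes.filter((n) => n.active).reduce((sum, n) => sum + n.drawCalls, 0);
}

// Compare the totals with the target shown and hidden, then restore its state.
function measureDrawCalls(nodes: readonly SceneNode[], target: SceneNode): number {
    const wasActive = target.active;
    target.active = true;
    const shown = countDrawCalls(nodes);
    target.active = false;
    const hidden = countDrawCalls(nodes);
    target.active = wasActive;
    return shown - hidden;
}

const player: SceneNode = { name: "player", active: true, drawCalls: 3 };
const demoScene: SceneNode[] = [
    { name: "ground", active: true, drawCalls: 1 },
    { name: "props", active: true, drawCalls: 3 },
    player,
];

console.log(countDrawCalls(demoScene));           // 7
console.log(measureDrawCalls(demoScene, player)); // 3
```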

To make it possible for more objects to be batched, I usually merge the materials of multiple objects as much as possible. A material mainly consists of a shader plus textures. We should use the same shader for as many objects as possible and then combine the textures of different objects into one texture, so that these objects use the same material. In addition, it may be necessary to change the rendering component type so that more objects use a rendering component supported by batching, for example by changing a SkinnedMeshRenderer component into a MeshRenderer component.
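When textures are combined into one, each object's UV coordinates have to be remapped into its region of the combined texture. The sketch below shows that remapping under an assumed layout of two textures packed side by side; `AtlasRegion` and the region values are hypothetical.

```typescript
// Sub-rectangle of the combined texture in normalized [0, 1] coordinates.
interface AtlasRegion {
    u: number;      // left edge
    v: number;      // bottom edge
    width: number;
    height: number;
}

// Remap a UV that addressed the object's own texture into its region of the shared texture.
function remapUV(uv: [number, number], region: AtlasRegion): [number, number] {
    return [region.u + uv[0] * region.width, region.v + uv[1] * region.height];
}

// Hypothetical layout: two textures packed side by side.
const crateRegion: AtlasRegion = { u: 0, v: 0, width: 0.5, height: 1 };
const barrelRegion: AtlasRegion = { u: 0.5, v: 0, width: 0.5, height: 1 };

console.log(remapUV([1, 1], crateRegion));      // [0.5, 1]
console.log(remapUV([0.5, 0.5], barrelRegion)); // [0.75, 0.5]
```

After remapping, both objects can sample the same texture and therefore share one material.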

After knowing the distribution of DrawCall and creating the conditions for batching as much as possible, let’s look at how to implement these batching technologies in Cocos Creator 3.x.

Static batch

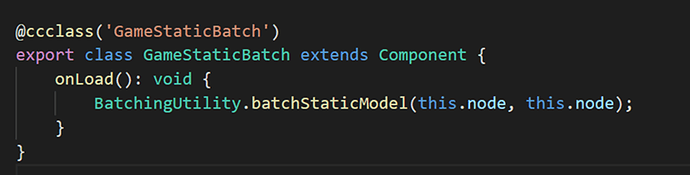

Put the objects that need to be statically batched under one node, then call the static batching API during initialization to pre-calculate the merged object and achieve batching.

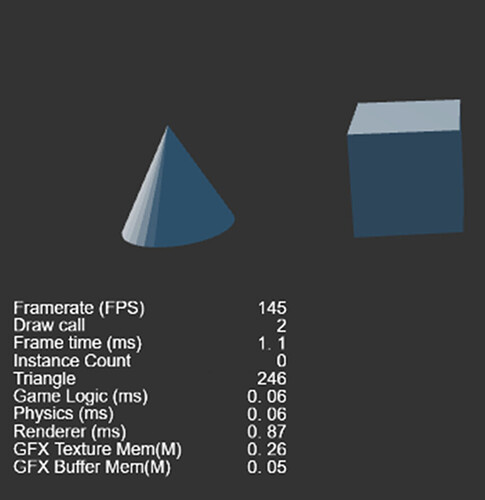

Take the following example, “1 cone + 1 cube.” The new mesh of all objects under the node is pre-calculated during initialization. 2 DrawCalls are required before static batching is turned on and only 1 afterwards, saving 1 DrawCall.

Before enabling

After enabling

Dynamic batching

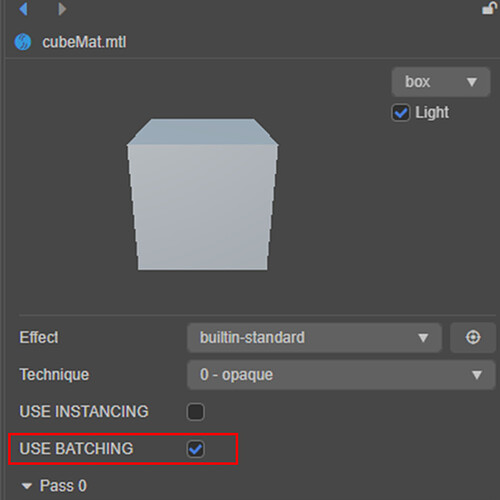

Dynamic batching only requires checking USE BATCHING on the material.

GPU Instancing batch

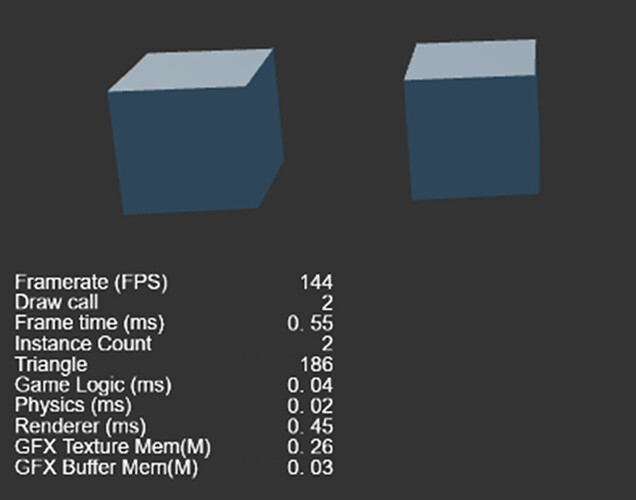

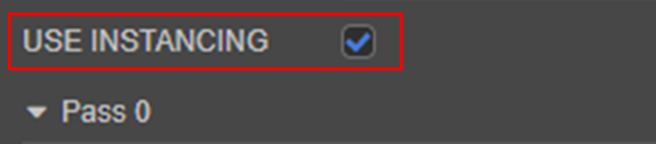

The same is true for GPU Instancing. Just check USE INSTANCING on the material:

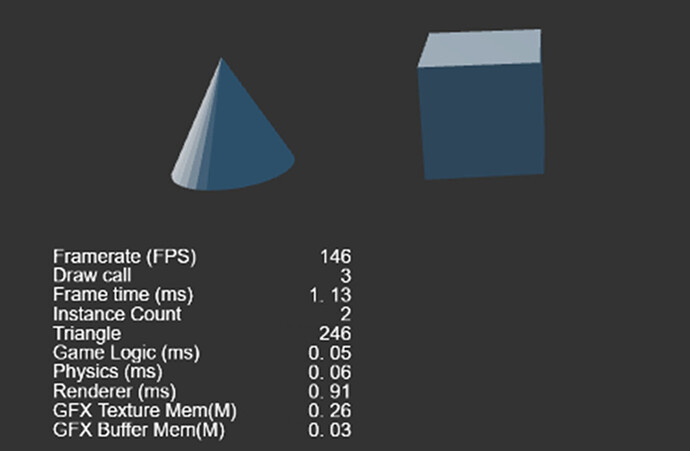

However, since GPU Instancing only works for multiple instances of the same mesh, “1 cone + 1 cube” still takes 3 DrawCalls, whereas “2 cubes” are merged into 1 DrawCall after GPU Instancing is turned on.

“1 cone + 1 cube” are not the same mesh object and cannot be batched together

“2 cubes” are the same mesh object and can be batched together

Optimize the 2D UI object DrawCall

2D is a special kind of 3D, so the batching method of the above 3D part is also applicable to 2D.

2D DrawCall optimization is more straightforward. As long as UI elements use the same atlas (the same texture), they can be batched together. All UI elements, Label included, use the Builtin-Sprite Shader, so whether UI objects are “batchable” depends on whether they use the same atlas.

As for Labels, the engine generates their textures automatically, so a Label’s texture and a sprite atlas are not the same atlas. If the text cache mode is enabled, the engine may render several different Label texts into the same atlas.

The core of 2D UI DrawCall optimization is 3 points:

- Try to pack the UI elements of the same interface into the same atlas.
- Optimize the hierarchy of 2D nodes. UI is rendered in hierarchy order, so try to keep UI elements from different atlases, as well as Labels, from interrupting each other. For example, organize UI elements as A1A2A3/B1B2B3/C1C2C3…, with the elements of atlases A, B, and C each grouped together, and avoid interleaving them as A1B1C1A2B2C2A3B3C3….
- Watch for Labels that interrupt the batching of UI objects.
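The effect of hierarchy order can be estimated with a simplified model, assuming that the renderer walks the elements in order and starts a new batch whenever the texture changes. `countUIDrawCalls` is a hypothetical helper for this estimate, not an engine function.

```typescript
// Count DrawCalls for UI elements rendered in hierarchy order:
// consecutive elements sharing an atlas merge into one batch.
function countUIDrawCalls(atlasPerElement: readonly string[]): number {
    let drawCalls = 0;
    let previous: string | undefined;
    for (const atlas of atlasPerElement) {
        if (atlas !== previous) {
            drawCalls += 1;
            previous = atlas;
        }
    }
    return drawCalls;
}

const grouped = ["A", "A", "A", "B", "B", "B", "C", "C", "C"];     // A1A2A3/B1B2B3/C1C2C3
const interleaved = ["A", "B", "C", "A", "B", "C", "A", "B", "C"]; // A1B1C1A2B2C2A3B3C3
const labelBetween = ["A", "label", "A"]; // a Label sitting between two sprites of atlas A

console.log(countUIDrawCalls(grouped));      // 3
console.log(countUIDrawCalls(interleaved));  // 9
console.log(countUIDrawCalls(labelBetween)); // 3
```

Under this model the same nine elements cost 3 DrawCalls when grouped and 9 when interleaved, and a single Label placed between two sprites of the same atlas splits one batch into three.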

That’s all for today’s sharing. I hope it is helpful to everyone! You are welcome to discuss and exchange ideas on the forum at: